Freznel AI

AI Democratization

Research. Bridging the AI disparity caused by high capex and opex, chip scarcity, high power consumption and a fragmented software stack.

What we do

Freznel AI provides a 'Vertical AI Inference stack' across the full AI value chain.

We provide an end-to-end integrated solution, customisable at every layer, for enterprises, developers and individual customers.

• Compute Layer – GPUs, TPUs, custom silicon, data centers

• Inference Layer – Kernels, SIMD, NEON, SPV

• Model Layer – Foundation models (LLMs, vision, multimodal)

• Platform Layer – SDKs, orchestration, inference engines

• Application Layer – Vertical AI products (healthcare, finance, coding, gaming, retail, etc.)

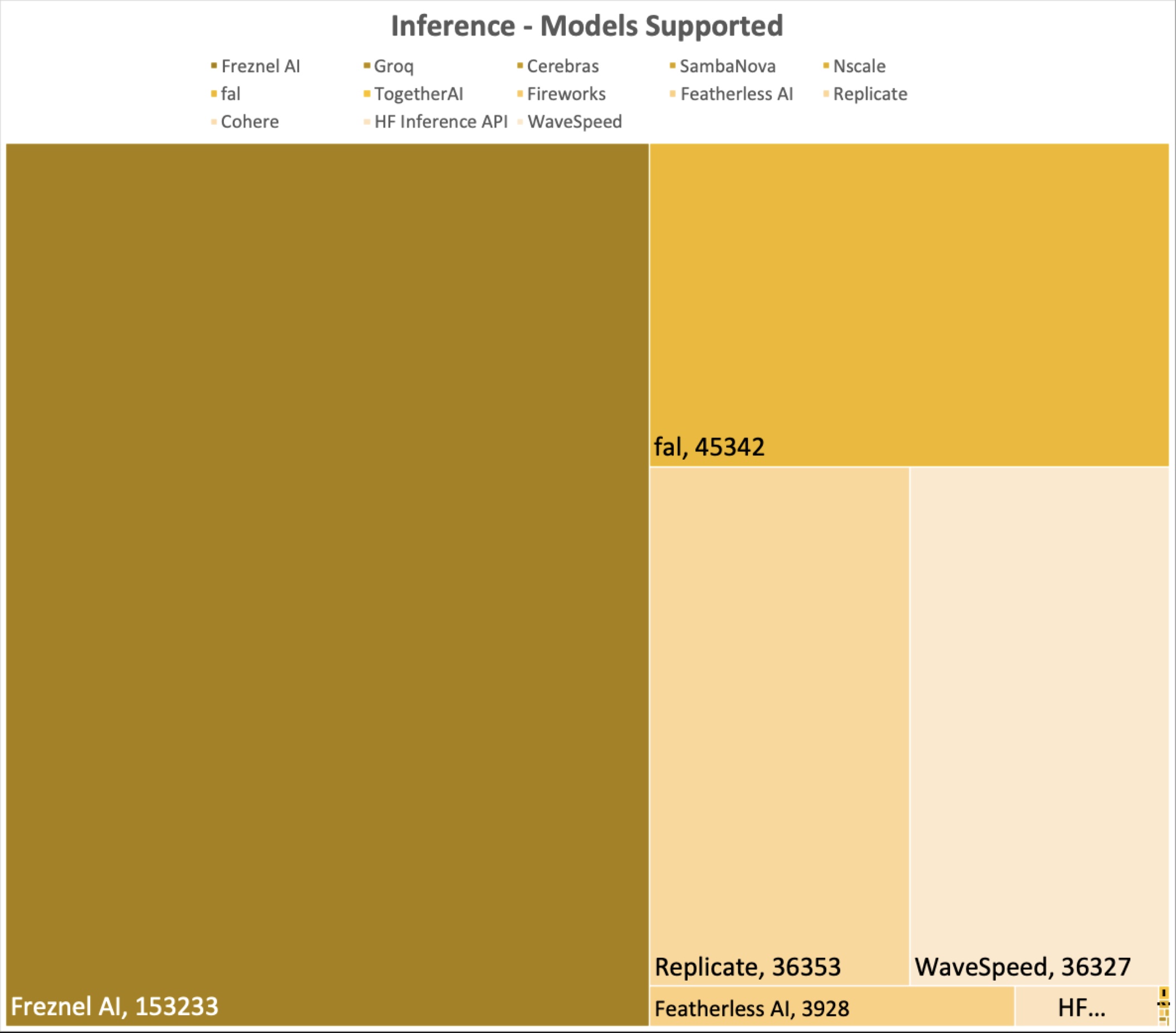

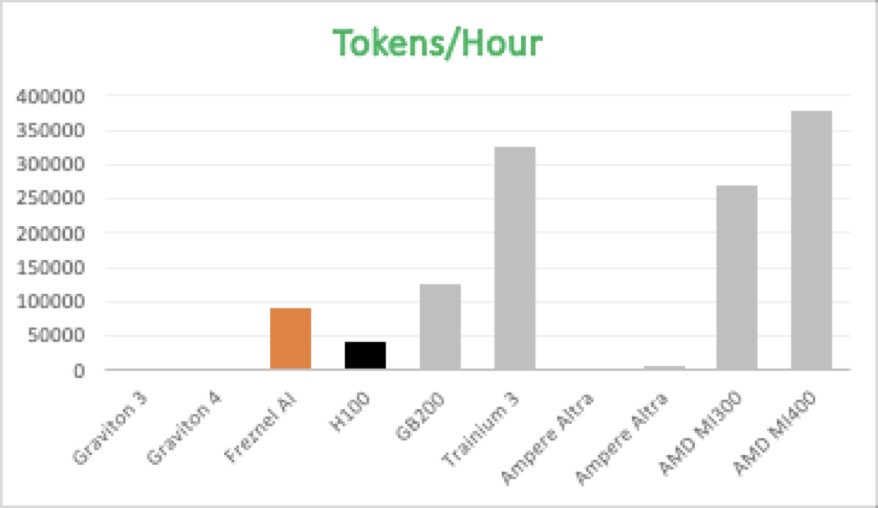

Freznel AI in numbers

The Freznel AI Vertical AI inference stack can:

• Run on ARM CPU+GPU

• Run on ARM CPU+NVIDIA GPU

• Run on MI450 (AMD CPU)+NVIDIA GPU

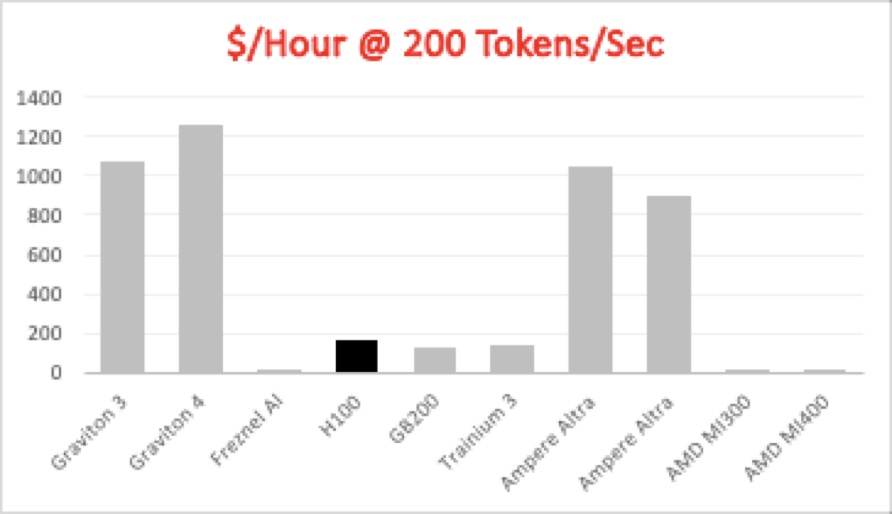

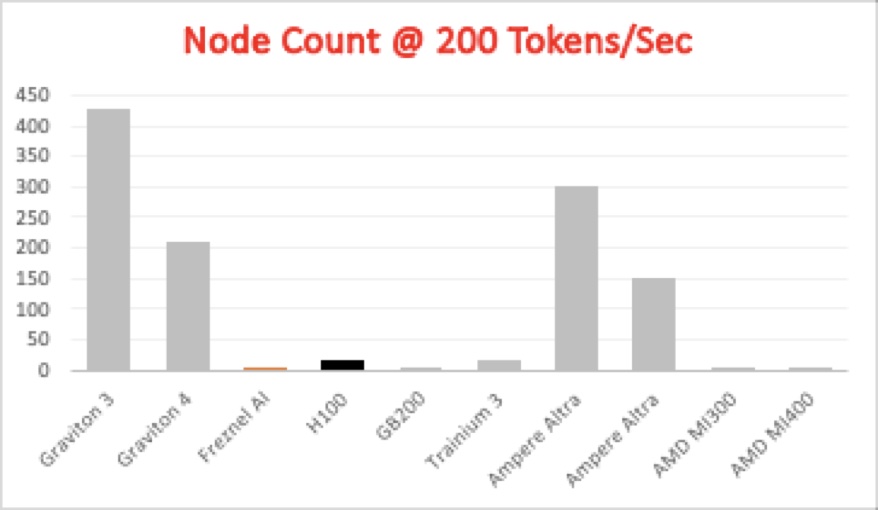

Our technology enables the CPU and GPU to be used in tandem rather than diverting all inference to the GPU.

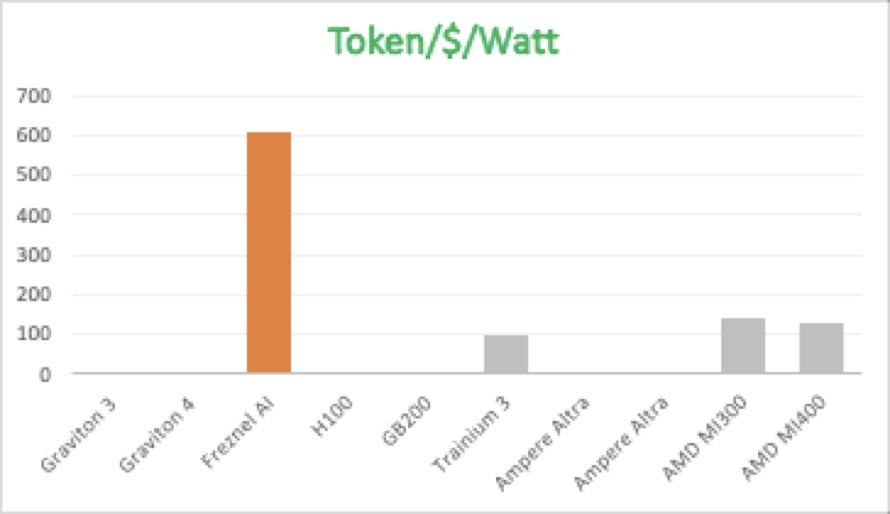

Sustainable AI

Economically and ecologically sustainable AI.

• We significantly lower the watt/1M token figure, which reduces electrical power and cooling needs.

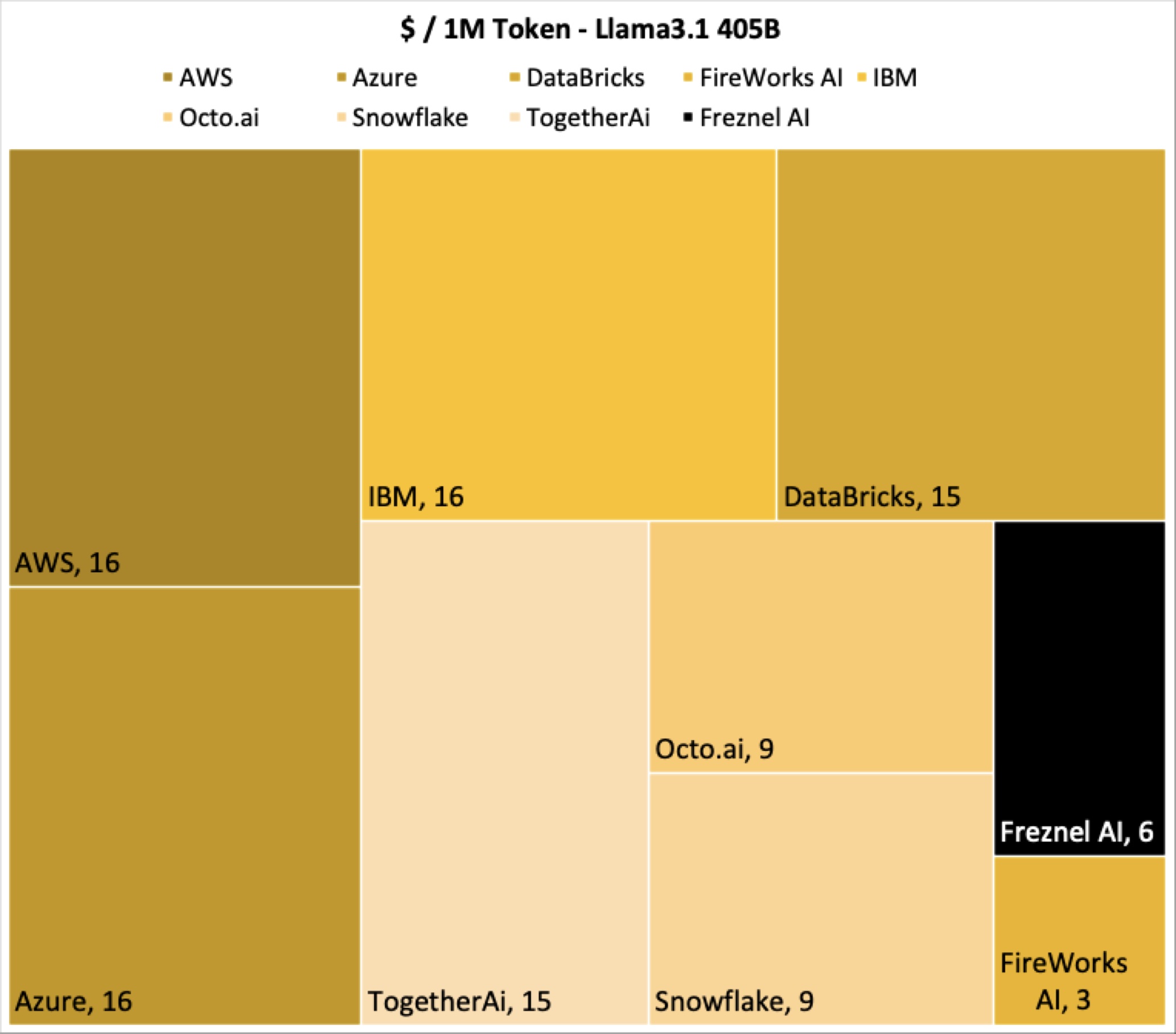

• We reduce capex and opex, lowering $/1M token

• Our design removes memory bottlenecks, increasing 1M token/sec/$

• Our design inherently offers privacy and GDPR compliance

• We improve business viability with positive economics

• The hardware we support does not fall under the purview of the CHIPS Act, and all countries can host a server farm
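The per-token metrics named in the list above (watt/1M token, $/1M token, 1M token/sec/$) can be made concrete with a small calculation. The sketch below is only an illustration: the `Deployment` class, its field names, and the example figures are hypothetical and are not Freznel AI measurements. It also assumes that "watt/1M token" means energy in watt-hours per 1M tokens, and that the per-dollar throughput is measured against an hourly cost that already folds in amortised capex and opex.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical description of one inference deployment (illustrative only)."""
    avg_power_watts: float     # average power draw while serving
    tokens_per_second: float   # sustained token throughput
    cost_per_hour_usd: float   # assumed amortised capex + opex per hour

    def seconds_per_million_tokens(self) -> float:
        # Time needed to produce 1M tokens at the sustained throughput.
        return 1_000_000 / self.tokens_per_second

    def watt_hours_per_million_tokens(self) -> float:
        # Energy = power x time, converted from watt-seconds to watt-hours.
        return self.avg_power_watts * self.seconds_per_million_tokens() / 3600

    def usd_per_million_tokens(self) -> float:
        # Cost = hourly cost x hours needed for 1M tokens.
        return self.cost_per_hour_usd * self.seconds_per_million_tokens() / 3600

    def tokens_per_second_per_usd(self) -> float:
        # Throughput obtained per dollar of hourly cost.
        return self.tokens_per_second / self.cost_per_hour_usd


# Made-up numbers, chosen only so the arithmetic is easy to follow.
example = Deployment(avg_power_watts=360, tokens_per_second=100, cost_per_hour_usd=1.8)
print(example.watt_hours_per_million_tokens())  # energy per 1M tokens, in Wh
print(example.usd_per_million_tokens())         # cost per 1M tokens, in USD
print(example.tokens_per_second_per_usd())      # throughput per hourly dollar
```

Under these assumptions, raising throughput at the same power draw and hourly cost improves all three figures at once, which is why throughput bottlenecks matter for both the energy and the cost metrics.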

Solution Maturity

Alpha tested. Beta is ongoing with our live app on the Mac and iOS stores and the Windows store.

Commercial product announcement soon!